At dinner the other day, my daughter was explaining a complex probability question from a recent mathematics test. My wife asked if she had the test paper, and suggested she put it into ChatGPT to get help.

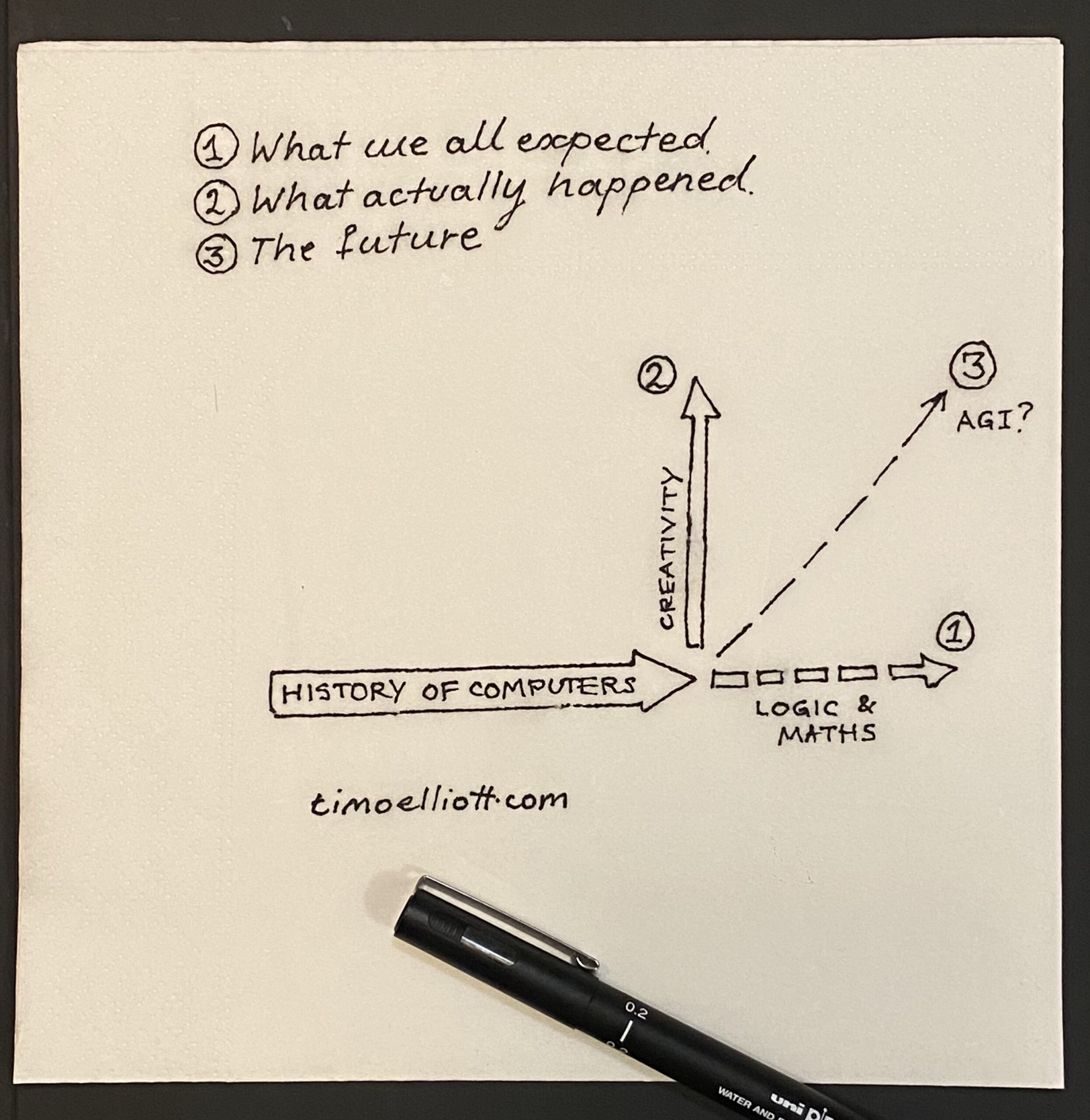

I’m sure everybody can relate to that idea. After all, the entire history of computing has told us that computers are good at maths and logic. So when we hear that artificial intelligence has had a considerable breakthrough, we all automatically assume that it’s in the same direction.

But that’s what’s so confounding about the latest large language models. They’re generally terrible at mathematics and struggle with logic problems that children can easily manage.

Instead, what they’re really good at is something we think of as much more human: creativity. They are deeply untrustworthy when it comes to facts and logic, but great at brainstorming and new ideas.

This wave of computing and AI is different. Throw out your mental model of software that does what you tell it to do. Instead, you have to talk to these models as if you’re trying to communicate with an alien species. They respond better to coaxing and threats than they do to orders and commands. Studies have shown that they give better answers if you are polite, promise to tip, or say that your life depends on it. They may even get sluggish just because it’s December!

The good news is that the next wave will apparently be back in line with our initial expectations. Researchers are working on models that are dramatically better at step-by-step reasoning, and can work their way through complex mathematics problems like my daughter’s probability test (e.g. the rumors swirling around OpenAI’s Q* or the student math example from Google’s Gemini Ultra).

That really will open up the opportunity space for how useful these technologies are in the real world, and get us a lot closer to the holy grail of artificial general intelligence (AGI).

There’s always lots of debate about whether computers are “really” intelligent and can “actually” think. But as Alan Turing said a long time ago, that’s a question that is “too meaningless to deserve discussion”.

I personally think humanity has too much pride to ever admit that computers are intelligent, even as computers now regularly beat us on almost every standard we can think of for measuring it.

But I think we’ve already passed a real watershed towards AGI. Getting the most out of computers now requires treating them as if they were intelligent, and flawed, just like our human colleagues. And from now on, they’ll just get steadily smarter.

Comments

One response to “What people get wrong about the new AI”

Great post as always Timo – my feeling is that everyone is fascinated by the technology and the potential first and forgets about the other side of the equation – the end-user and his (human, so human) expectations. Digging on this vein, I recommend this great article which was an eye-opener for me – https://kozyrkov.medium.com/whats-different-about-today-s-ai-380569e3b0cd.