At the Gartner Business Intelligence and Analytics Summit in Barcelona, there was near-universal agreement on three things about “big data”:

- It’s an awful term (but we’re stuck with it)

- Whatever it means, it’s a big deal, and requires big changes to traditional information infrastructures

- It will result in big new business opportunities

“Big Data” is a terrible term

Gartner analyst Doug Laney first coined the term “big data” over 12 years ago (at least in its current form – people have been complaining about “information overload” since Roman times). But the term’s meaning is still far from clear, and it was nominated the #1 “tech buzzword that everyone uses but don’t quite understand” (followed closely by “cloud”).

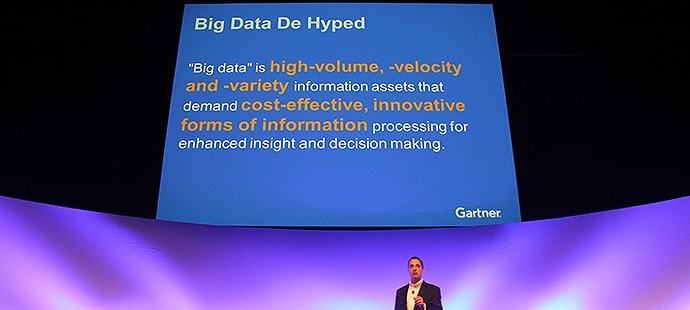

When using the term, Gartner usually keeps the quote marks in place (i.e. it’s “big data”, not big data). Here’s the definition provided by analyst Ted Friedman to “de-hype” the term during the summit keynote:

“‘Big data’ are high volume, velocity and variety information assets that demand cost-effective, innovative forms of information processing for enhanced insight and decision-making.”

Analyst Donald Feinberg warned people that “talking only about big data can lead to self-delusion” and urged people not to “surrender to the hype-ocracy.” He left no doubt over where he stood on the use of the term: “Big data doesn’t mean MapReduce or Hadoop. Big data doesn’t exist, it’s meaningless, it’s ridiculous…” The audience started applauding, to which he replied: “Why are you clapping?! Why do you all fall for it? Why do the vendors do it?!”

As SAP’s Jason Rose put it, “how can we demystify this? …easy, drop the ‘big’. Data has always been the key challenge in BI”.

For what it’s worth, here’s my tongue-in-cheek definition, and it’s worth noting that big data is quickly becoming the default term for what we used to call analytics or business intelligence.

But Big Data is a Big Deal

Despite the problems, Doug Laney noted that big data is the most-searched-for term on Gartner.com. Why is it so popular? Maybe because it’s so nebulous that people want to check if they have understood it. Or maybe because there’s no other more precise term to indicate the new analytic opportunities. And maybe because “hype has a value” as Ted Friedman put it: big data has proved to be a new opportunity to talk to business people about the power of analytics, and because everybody’s searching for it, vendors would be crazy not to include it in their marketing.

Conference attendees generally believed that the biggest opportunity for big data analysis was new insights from “dark data” that lies unused within organizations today. Gartner highlighted the dangers of implementing shiny new big data technology separately from existing analytics infrastructures: “Do not make your big data implementations siloed. Make them part of the overall strategy for BI,” said Ted Friedman. “Link to stuff you are already doing. Don’t make big data a standalone thing. And don’t feel like you’ve got to go out and buy a whole new technology stack.”

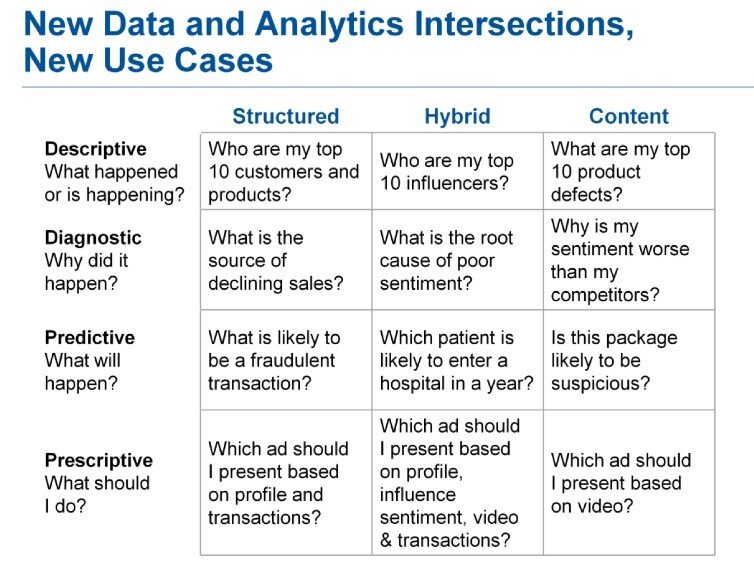

Analyst Rita Sallam, in a session on data variety, gave some examples of the new opportunities:

Some of Gartner’s public predictions related to big data:

- By 2015, 65 percent of packaged analytic applications with advanced analytics will come embedded with Hadoop.

- By 2016, 70 percent of leading BI vendors will have incorporated natural-language and spoken-word capabilities.

- By 2015, more than 30 percent of analytics projects will deliver insights based on structured and unstructured data.

But What Does It Mean For The Business?

Donald Feinberg: “Realize that big data is not about doing ‘more’ of the same thing – it’s about doing things differently.” “The major opportunities for big data are around ways to transform the business and disrupt the industry,” said Doug Laney. These included radically changing existing business processes, introducing new, more-personalized products and services, and “answering chewy questions that weren’t possible before.” Some examples:

- Netflix did deep analysis of their viewers’ preferences, and used that to craft the new “House of Cards” TV series – a $100M investment

- New financial lenders are using big data to find untapped banking opportunities – including lending scores based on what you say on social media

- Passur uses big data to provide real-time monitoring of air traffic, to potentially save millions of dollars per year. Today, pilots’ estimated times of arrival are off by more than ten minutes 10 percent of the time, and by more than five minutes 30 percent of the time – knowing exactly when the planes will arrive means more automation, better operating efficiencies, improved security, etc.

- Enologix analyzes the chemical composition of new wines to predict their Wine Spectator score, and offers advice on how to improve the score

- Dollar General, Kroger and other retailers provide data to partners to analyze, for “free strategic advice”

- Insurance companies are using text mining on previously-unexamined “dark data” on claims forms to sniff out indicators of fraud

Mats-Olov Eriksson of King.com, a Sweden-based casual gaming site (card games, social games, etc), gave an entertaining presentation called “Beyond Big and Data”, hosted by recently-returned Gartner analyst Frank Buytendijk. It was illustrated with more cute kittens, puppies, and otters than I’ve ever seen before at a technology conference!

Mats’s title is “Spiritual Leader, Data Warehouse”, and he explained how the company collects massive amounts of log data about user activities, then uses a combination of Hadoop, traditional data warehousing, and BI cubes to optimize the player experience – and the company’s profits.

The company works with Facebook on games like Candy Crush Saga. The company’s main revenue comes from in-app purchases: for example, you might get five free lives in a game, and if you run out you can either wait for a period, or pay a small sum to play immediately. To try to figure out what options generate the most revenue, and keep players in the game, the company currently processes 60,000 log files per day. The files contain around three billion “events” per day, and the company expects that to rise to six billion by the end of this year. Mats explained that big data is best compared to a software development project, requiring hard-to-find skilled personnel. He characterized Hadoop as a “powerful but immature five year old” and explained that they used a MySQL data mart for low-latency access: “Hive is anything but low latency – it takes fifteen seconds before you even get an error message!”.
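To make the “batch system plus data mart” idea concrete, here is a minimal sketch of the general pattern: a batch step collapses raw events into small summary counts, and those counts are loaded into a relational table that answers questions quickly. This is my own illustration, not King.com’s actual pipeline. The event fields, table name, and sample data are all hypothetical, a plain Python `Counter` stands in for the Hadoop job, and an in-memory SQLite database stands in for the MySQL data mart.

```python
import sqlite3
from collections import Counter

# Hypothetical raw log events: (day, game, event_type). The field names and
# values are illustrative assumptions, not King.com's real schema.
RAW_EVENTS = [
    ("2013-02-05", "candy_crush", "life_lost"),
    ("2013-02-05", "candy_crush", "life_lost"),
    ("2013-02-05", "candy_crush", "life_purchased"),
    ("2013-02-05", "card_game", "life_lost"),
    ("2013-02-06", "candy_crush", "life_purchased"),
]


def aggregate(events):
    """Batch step (stand-in for a Hadoop job): count events per (day, game, type)."""
    return Counter(events)


def load_mart(conn, counts):
    """Load the small aggregated result into a relational table for fast lookups."""
    conn.execute(
        "CREATE TABLE daily_events ("
        "day TEXT, game TEXT, event_type TEXT, n INTEGER, "
        "PRIMARY KEY (day, game, event_type))"
    )
    conn.executemany(
        "INSERT INTO daily_events VALUES (?, ?, ?, ?)",
        [(day, game, etype, n) for (day, game, etype), n in counts.items()],
    )
    conn.commit()


def purchases_per_game(conn):
    """Query the mart, not the raw logs, so the answer comes back quickly."""
    rows = conn.execute(
        "SELECT game, SUM(n) FROM daily_events "
        "WHERE event_type = 'life_purchased' GROUP BY game ORDER BY game"
    )
    return dict(rows.fetchall())


# SQLite in memory is only a stand-in for the MySQL data mart mentioned in the talk.
conn = sqlite3.connect(":memory:")
load_mart(conn, aggregate(RAW_EVENTS))
print(purchases_per_game(conn))
```

The point of the split is that the expensive pass over billions of events happens once, in batch, while interactive questions hit a table that is orders of magnitude smaller.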

One of the recent challenges the company has faced is information governance – a term they hadn’t even used until six months ago. Mats noted that the European privacy laws were by far the “harshest” they deal with.

In a separate presentation, which I unfortunately didn’t attend, Tom Fastner of eBay gave an overview of his company’s analytic systems, explaining that they process more than 100 petabytes of data I/Os every day, and a “singularity” key-value store on Teradata manages 36 petabytes of data. The largest table is 3 petabytes / 3 trillion rows, and only takes 32 seconds to scan. Here’s a presentation from 2011 that talks about how eBay combines structured, semi-structured, and unstructured data.
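To get a feel for what those eBay figures imply, here is a quick back-of-the-envelope calculation. It assumes decimal units (1 petabyte = 10^15 bytes) and takes the quoted numbers at face value; the function and variable names are mine.

```python
PB = 10**15  # bytes in a decimal petabyte (assumption: decimal, not binary, units)
TB = 10**12  # bytes in a decimal terabyte


def scan_stats(table_bytes, table_rows, scan_seconds):
    """Return (bytes scanned per second, rows scanned per second, average bytes per row)."""
    return (
        table_bytes / scan_seconds,
        table_rows / scan_seconds,
        table_bytes / table_rows,
    )


# Figures quoted in the talk: 3 petabytes, 3 trillion rows, 32-second scan.
bytes_per_s, rows_per_s, bytes_per_row = scan_stats(3 * PB, 3 * 10**12, 32)

print(f"{bytes_per_s / TB:.2f} TB scanned per second")
print(f"{rows_per_s / 10**9:.2f} billion rows per second")
print(f"{bytes_per_row:.0f} bytes per row on average")
```

Under those assumptions the scan works out to roughly 94 terabytes and 94 billion rows per second, with an average row of about a kilobyte.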

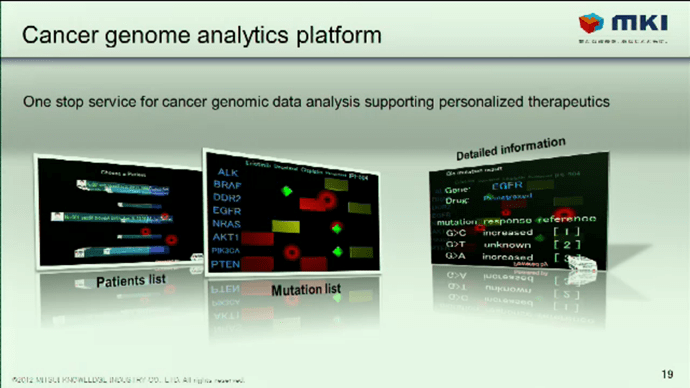

The inimitable Steve Lucas says of big data: “when you see it, you know it”. MKI, a bioscience company, is using big data to analyze genome data, using a combination of Hadoop, open-source “R” algorithms, and SAP’s in-memory platform, SAP HANA (registration required).

Here’s a summary of the MKI project:

In Conclusion

Big data is a lousy term, but offers big opportunities in return for some big information infrastructure changes. What does the future hold? Let’s hope less of the hype, and more of the business change…